This is a project I have been working on during my internship at the Airlab since May 2018. The goal of this sensor pod is to achieve high-accuracy 3D reconstruction using a pair of high-resolution XIMEA stereo cameras and a Velodyne LiDAR. The device can also capture thermal information with its onboard FLIR thermal camera.

Hardware Overview

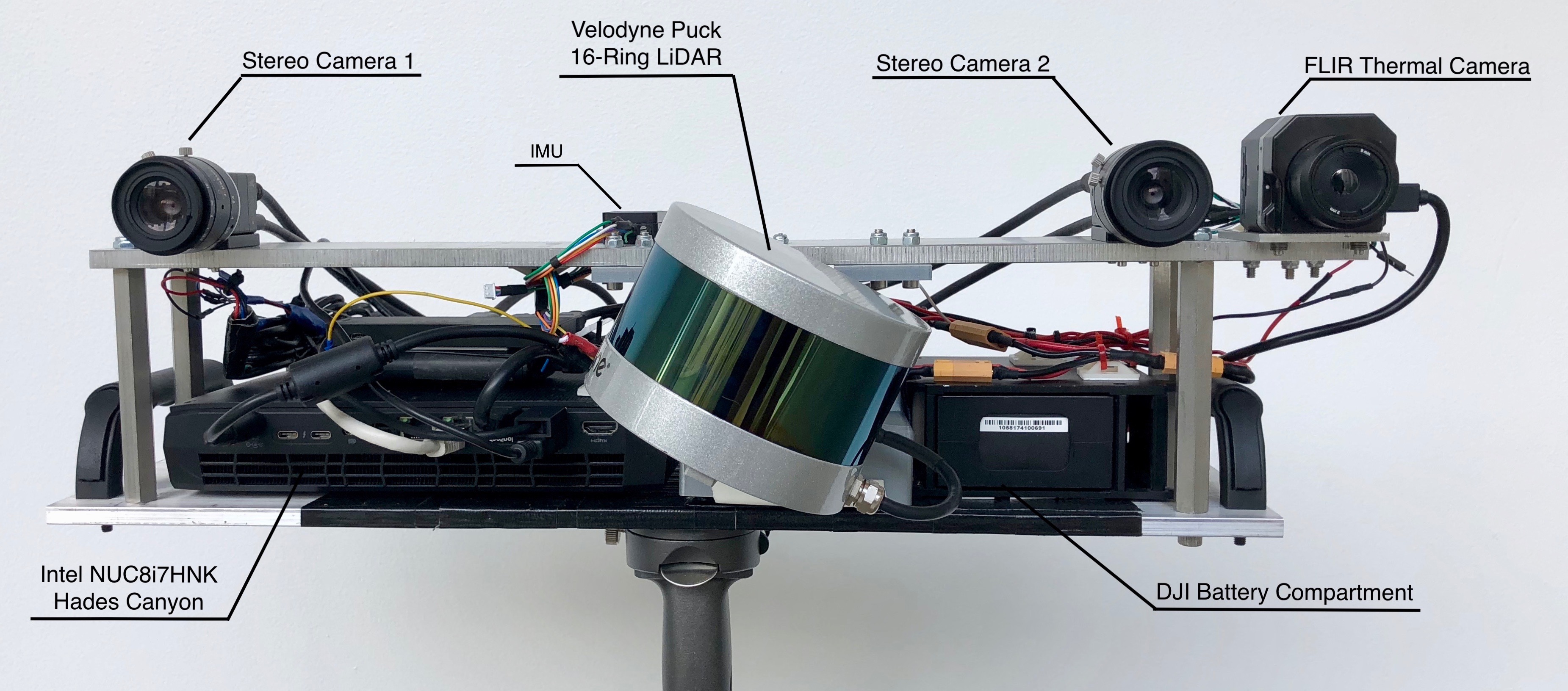

As shown in the picture, the sensor pod consists of a set of sensors (stereo and thermal cameras, LiDAR, IMU), an Intel NUC onboard computer, a DJI battery as the power supply, and other components (two voltage converters, two USB hubs, and a Teensy microcontroller for sensor triggering).

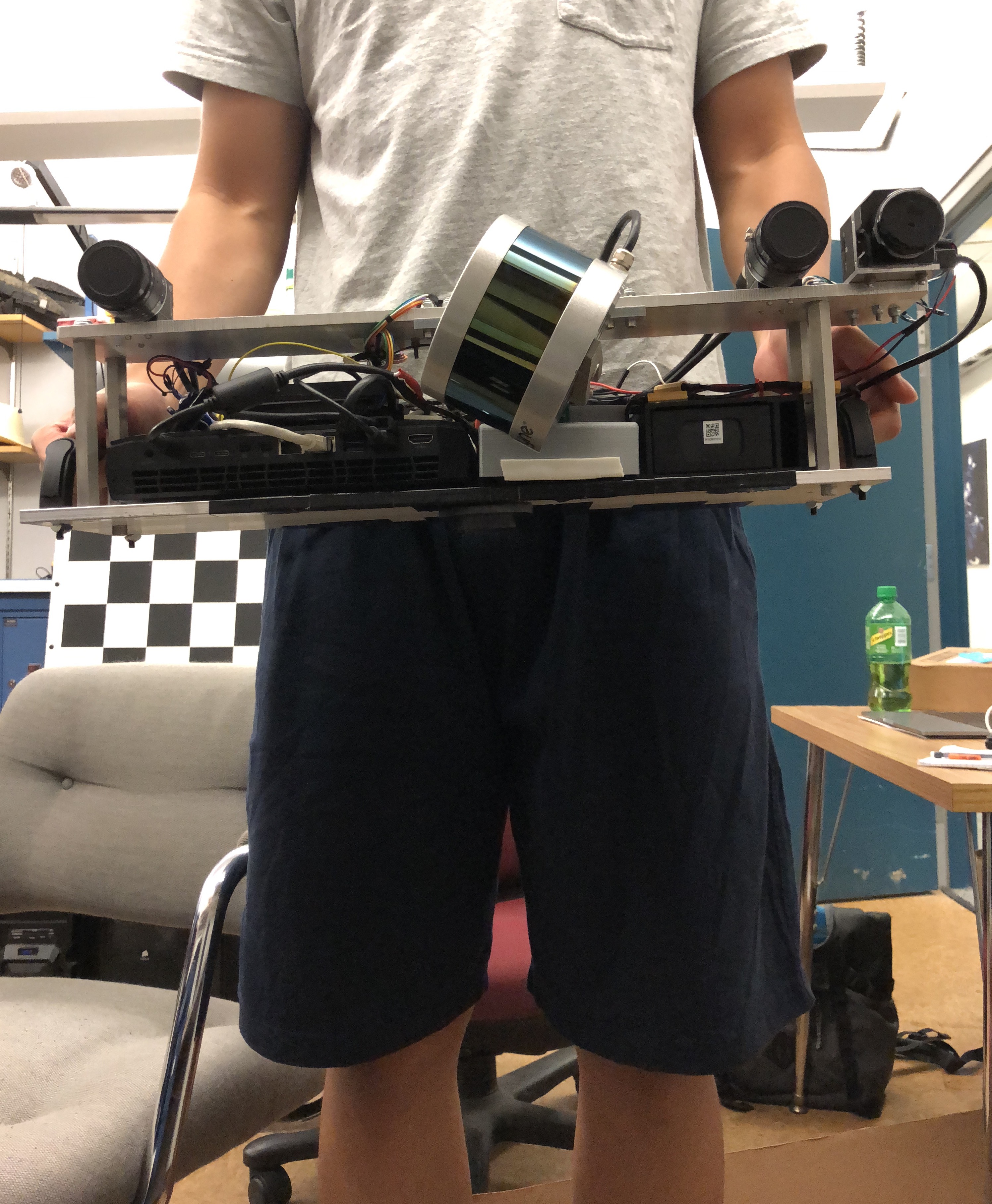

All the sensors are mounted on a single aluminum sheet so that they do not shift relative to one another during operation. The device can be used either hand-held or mounted on a tripod.

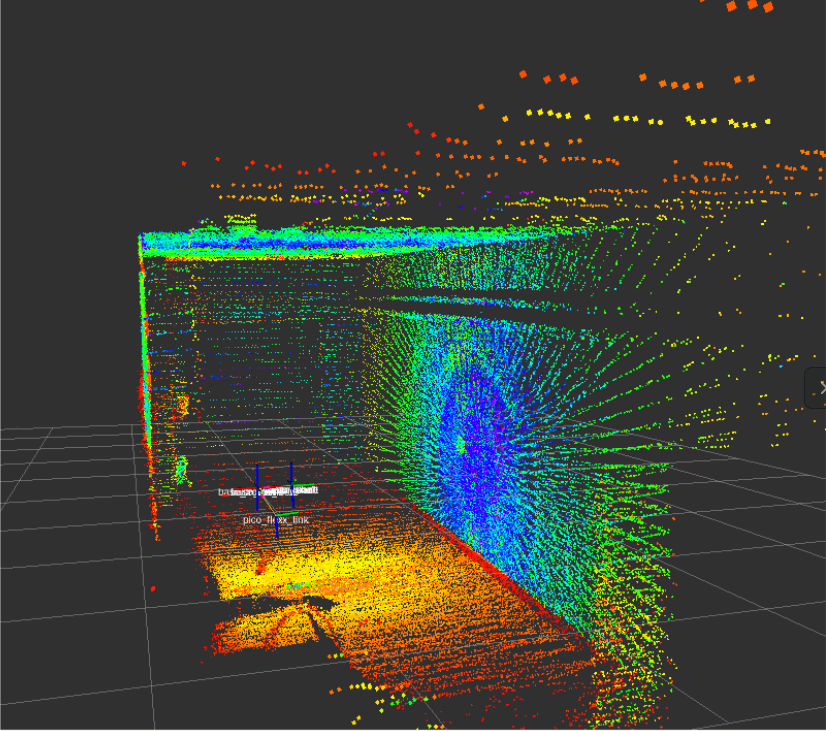

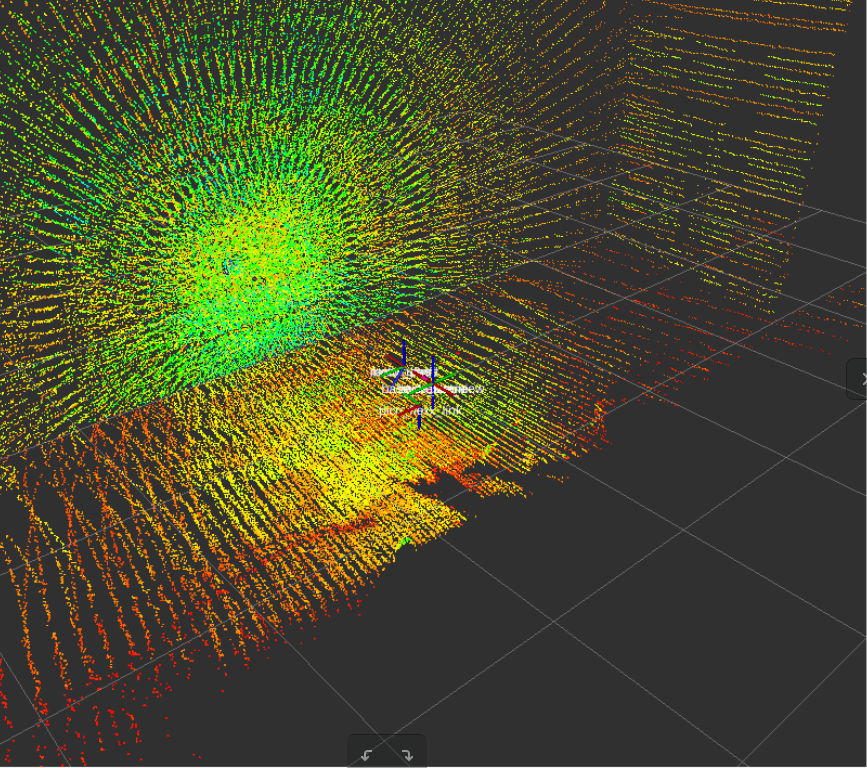

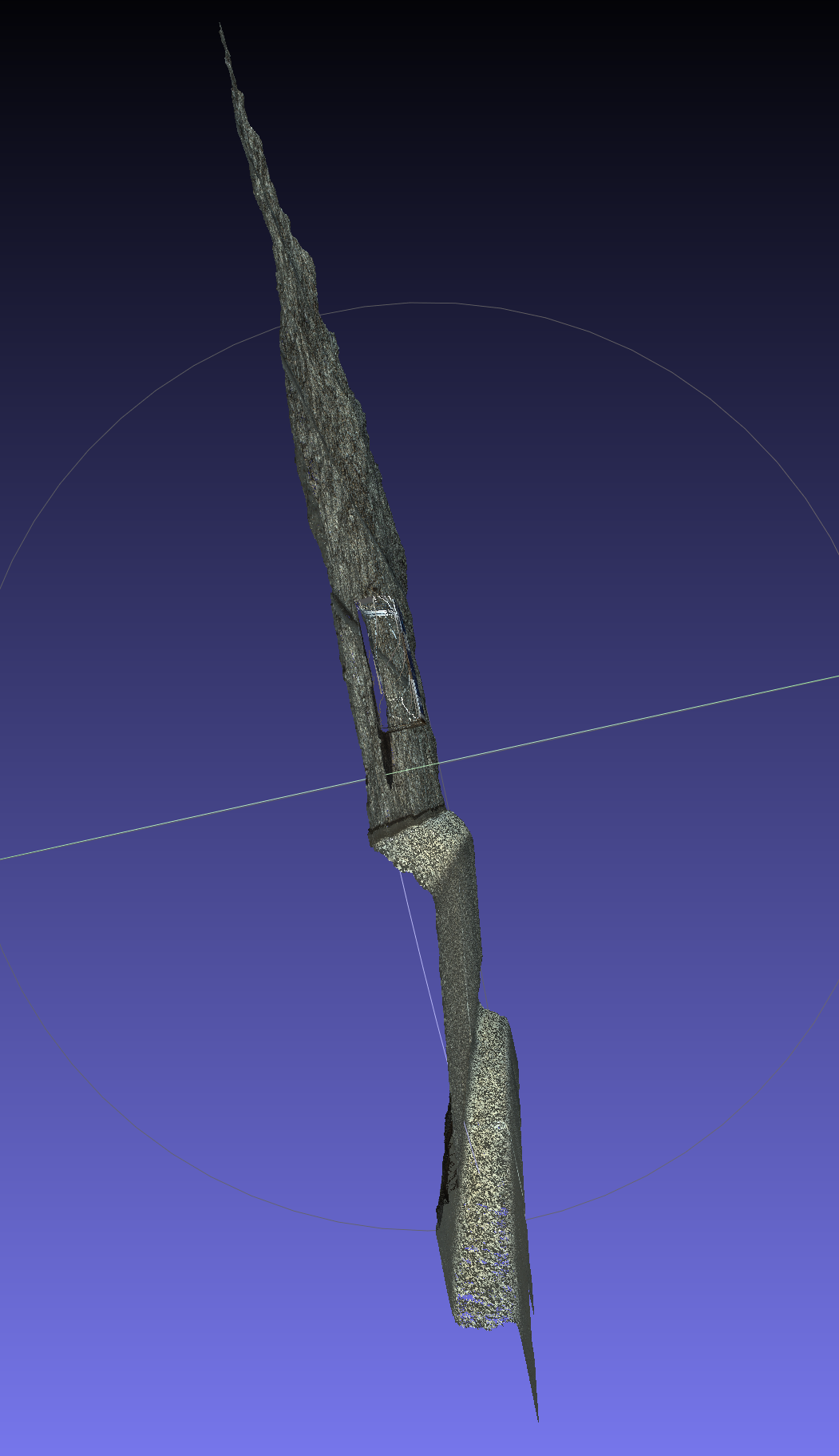

The Velodyne LiDAR is connected to a customized g2 motor that rotates the entire LiDAR unit externally. By combining the motor's encoder readings with the Velodyne's point measurements, we can generate a denser point cloud that covers the full 3D space around the device.
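The merging of encoder readings and LiDAR points can be sketched as follows. This is a minimal illustration, not the pod's actual pipeline: it assumes the motor spins the LiDAR about a single axis (taken here to be the x axis of a fixed base frame), uses a hypothetical encoder resolution `TICKS_PER_REV`, and ignores the time synchronization and motor-to-LiDAR extrinsic calibration a real rig would need.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]

# Hypothetical encoder resolution; the real motor's value is not given here.
TICKS_PER_REV = 4096


def encoder_to_angle(ticks: int, ticks_per_rev: int = TICKS_PER_REV) -> float:
    """Convert a raw encoder count into a motor angle in radians."""
    return 2.0 * math.pi * (ticks % ticks_per_rev) / ticks_per_rev


def rotate_about_x(p: Point, theta: float) -> Point:
    """Rotate a point about the x axis (assumed motor axis) by theta radians."""
    x, y, z = p
    c, s = math.cos(theta), math.sin(theta)
    return (x, c * y - s * z, s * y + c * z)


def accumulate_scans(scans: List[Tuple[int, List[Point]]]) -> List[Point]:
    """Merge (encoder_ticks, points) pairs into one cloud in the fixed base frame.

    Each scan's points are expressed in the rotating LiDAR frame; rotating
    them by the motor angle at capture time puts every scan in the same
    base frame, so the accumulated cloud grows denser as the motor turns.
    """
    cloud: List[Point] = []
    for ticks, points in scans:
        theta = encoder_to_angle(ticks)
        cloud.extend(rotate_about_x(p, theta) for p in points)
    return cloud
```

For example, the same raw point captured at 0 ticks and at a quarter revolution (1024 ticks) lands at two different places in the base frame, which is how the external rotation fills in regions a stationary LiDAR would miss.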

Algorithms Overview

Given a pair of stereo images, we find corresponding feature points in the two images and construct a disparity map. By combining the disparity map with the camera parameters (both intrinsic and extrinsic), we compute the depth map of the target and then construct the point cloud from the matched feature points.
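The disparity-to-point-cloud step can be illustrated with the standard rectified-stereo relations. This is a sketch under assumptions, not the stereo algorithm used in this project: it assumes the image pair is already rectified, that the focal length `f` and principal point (`cx`, `cy`) come from the intrinsics, and that the baseline `baseline` comes from the extrinsics. Depth is then $Z = fB/d$, and the pinhole model gives $X = (u - c_x)Z/f$ and $Y = (v - c_y)Z/f$.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


def disparity_to_point(u: float, v: float, d: float,
                       f: float, cx: float, cy: float,
                       baseline: float) -> Optional[Point]:
    """Back-project pixel (u, v) with disparity d into the left camera frame.

    Assumes a rectified pair: Z = f * B / d, X = (u - cx) * Z / f,
    Y = (v - cy) * Z / f. Returns None for non-positive disparity,
    which here marks a pixel with no valid match.
    """
    if d <= 0:
        return None
    z = f * baseline / d
    return ((u - cx) * z / f, (v - cy) * z / f, z)


def disparity_map_to_cloud(disparity: List[List[float]], f: float,
                           cx: float, cy: float,
                           baseline: float) -> List[Point]:
    """Convert a row-major disparity map (rows indexed by v) into a point cloud."""
    cloud: List[Point] = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            p = disparity_to_point(u, v, d, f, cx, cy, baseline)
            if p is not None:
                cloud.append(p)
    return cloud
```

With hypothetical values f = 1000 px, B = 0.25 m and a disparity of 50 px, the depth is 1000 × 0.25 / 50 = 5 m. Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant points than for close ones, which is one reason high-resolution cameras help reconstruction accuracy.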

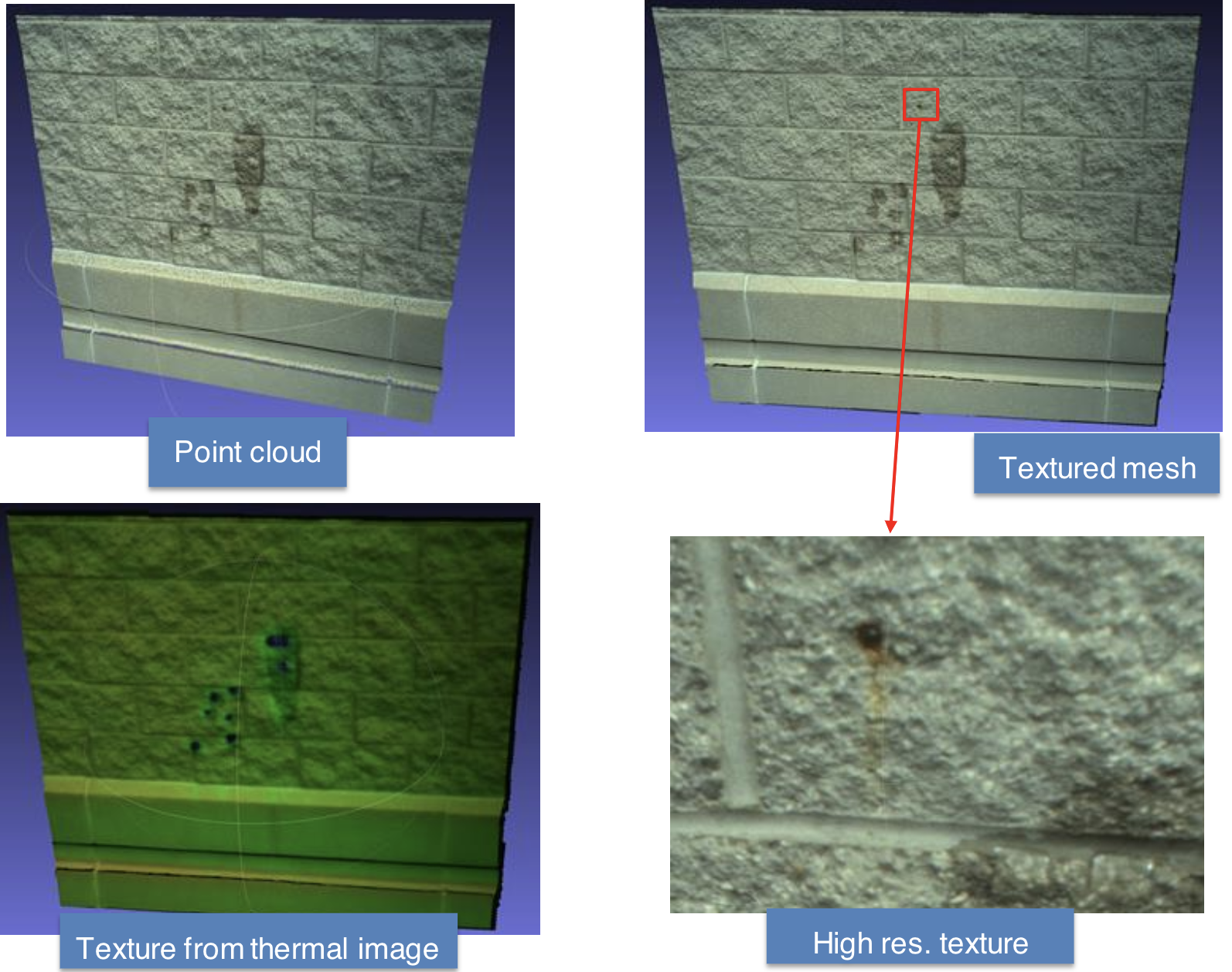

We then generate a mesh from the point cloud and map the color image and thermal textures onto it to produce the final result.
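The source does not describe how the textures are applied. One common approach, shown here only as an assumed sketch, is to project each mesh vertex into the color or thermal camera and use the normalized pixel position as its texture coordinate. The camera pose (`R`, `t`), intrinsics (`f`, `cx`, `cy`), and image size are hypothetical inputs, and the sketch does no occlusion test or best-view selection across multiple images.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]
UV = Optional[Tuple[float, float]]


def project_vertex(p: Vec3, R: Mat3, t: Vec3, f: float, cx: float, cy: float,
                   width: int, height: int) -> UV:
    """Project a world-frame vertex into an image; return normalized (u, v).

    R and t map world points into the camera frame: p_cam = R * p + t.
    Returns None if the vertex is behind the camera or falls outside the image.
    """
    xc = sum(R[0][k] * p[k] for k in range(3)) + t[0]
    yc = sum(R[1][k] * p[k] for k in range(3)) + t[1]
    zc = sum(R[2][k] * p[k] for k in range(3)) + t[2]
    if zc <= 0:
        return None
    u = f * xc / zc + cx  # pinhole projection to pixel coordinates
    v = f * yc / zc + cy
    if not (0 <= u < width and 0 <= v < height):
        return None
    return (u / width, v / height)  # normalize to [0, 1) texture space


def texture_coords(vertices: List[Vec3], R: Mat3, t: Vec3, f: float,
                   cx: float, cy: float, width: int, height: int) -> List[UV]:
    """Compute a texture coordinate (or None) for every mesh vertex."""
    return [project_vertex(p, R, t, f, cx, cy, width, height) for p in vertices]
```

The same function works for both textures: calling it once with the color camera's calibration and once with the thermal camera's gives two sets of texture coordinates for the same mesh.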

Results

Here are some results so far:

Credit

Stereo vision algorithms: Yaoyu Hu, http://www.huyaoyu.com/

Thermal texture generation: Ruixuan Liu, https://github.com/waynekyrie